From data to answers: what you need for Generative AI

A chatbot that searches through technical manuals in seconds. Software that automatically analyzes quality reports. Systems that can answer complex questions without you needing to know exactly where the information lives. Generative AI can feel almost magical, but successful implementation starts with solid groundwork.

The technology is here, the possibilities are vast, and tools like ChatGPT, Copilot, and Gemini have made AI a household topic. Yet many organizations still struggle to move from experimentation to practical, reliable use.

Machine learning and generative AI

Generative AI creates new content based on existing data. Unlike traditional AI systems that classify or detect patterns, generative models can write text, generate images, or produce code.

At the heart of most generative AI solutions are Large Language Models (LLMs). These models are trained on enormous amounts of text data and can understand and produce human language. Think of tools like ChatGPT, Google Gemini, or Microsoft Copilot. They “understand” context, can reason through a question, and formulate natural-language answers.

The key difference from classical machine learning? Traditional models are trained for a single, specific task, such as predicting machine failures. LLMs, on the other hand, can handle multiple tasks: answering questions, summarizing documents, writing code, or analyzing reports.

Practical applications of generative AI

The true power of generative AI becomes clear when working with unstructured data. Traditional systems struggle with the nuances of language and format; LLMs thrive on them.

- Knowledge management is a clear starting point. Instead of spending twenty minutes searching for the right machine setting, you can simply ask a system trained on your company’s technical manuals, reports, and troubleshooting documents. The answer arrives in seconds, with source references for validation.

- Quality control benefits too. An LLM can analyze and summarize thousands of inspection reports, identify recurring patterns, and suggest improvements. It doesn’t replace human expertise; it amplifies it by speeding up data-driven insights.

- Semantic search takes it a step further. Traditional search tools look for exact words. Semantic search understands meaning. If someone types “screen not working,” it also finds “no power” or “display remains black.” That’s a big leap forward for troubleshooting and knowledge sharing.

- Software development is another area where LLMs shine. They can write code, find bugs, and even generate documentation. Developers use them as intelligent assistants, available anytime, answering questions in real time.
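The semantic search idea from the list above can be sketched in a few lines. This is a minimal illustration, assuming every text has already been turned into an embedding vector by some embedding model. The three-dimensional vectors and the names `TOY_EMBEDDINGS` and `semantic_search` are invented for this example; real embeddings have hundreds of dimensions and come from a model, not from a hand-written table.

```python
import math

# Hypothetical, hand-made "embeddings" for illustration only.
# Texts with a similar meaning get vectors that point in a similar direction.
TOY_EMBEDDINGS = {
    "screen not working": [0.9, 0.8, 0.1],
    "no power": [0.7, 0.9, 0.0],
    "display remains black": [0.95, 0.7, 0.05],
    "replace the conveyor belt": [0.05, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query, documents, top_k=2):
    """Rank documents by vector similarity to the query, not by shared words."""
    query_vec = TOY_EMBEDDINGS[query]
    scored = [(cosine_similarity(query_vec, TOY_EMBEDDINGS[doc]), doc) for doc in documents]
    scored.sort(reverse=True)
    return [doc for _, doc in scored[:top_k]]


results = semantic_search(
    "screen not working",
    ["no power", "display remains black", "replace the conveyor belt"],
)
print(results)
```

Note that the query shares no words with "no power" or "display remains black"; the match comes entirely from the vectors being close together.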

Success starts with data

To make generative AI work, data is your foundation. Not just its quality, but how it’s organized and accessed.

For many organizations, internal chatbots are an attractive entry point. These systems, powered by Retrieval Augmented Generation (RAG), can answer process-related questions using internal data. But for that to work, the system needs access to all relevant documents, often scattered across SharePoint sites, folders, and legacy spreadsheets.

Before you build anything, you need to know where your data lives and how to make it accessible. Then come the permissions. A well-designed RAG system should only give employees access to the information they’re authorized to see. That means rethinking your entire information architecture, from financial document restrictions to operational data flows.
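One way to picture permission-aware retrieval is to filter documents by access rights before anything is ranked or sent to a model. The sketch below is an assumption-laden illustration, not a reference design: the `Document` class, the group names, and the sample texts are hypothetical, and simple word overlap stands in for a real retriever.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: frozenset  # hypothetical access-control field


def tokenize(text):
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question, documents, user_groups, top_k=2):
    # Permission filter comes first: unauthorized documents never reach ranking.
    visible = [d for d in documents if d.allowed_groups & user_groups]
    words = tokenize(question)
    # Word overlap is a stand-in for a real retriever such as embedding search.
    scored = [(len(words & tokenize(d.text)), d) for d in visible]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(question, context_docs):
    """Combine the retrieved sources and the question into one prompt string."""
    context = "\n".join(f"[{d.title}] {d.text}" for d in context_docs)
    return f"Answer using only the sources below.\n{context}\nQuestion: {question}"


DOCS = [
    Document("Press maintenance", "Lubricate the press every 200 operating hours",
             frozenset({"operations"})),
    Document("Quarterly budget", "The maintenance budget for the press line is confidential",
             frozenset({"finance"})),
]

question = "How often should we lubricate the press?"
operator_docs = retrieve(question, DOCS, user_groups={"operations"})
prompt = build_prompt(question, operator_docs)
print(prompt)
```

Filtering before retrieval, rather than after generation, means restricted text never enters the prompt, so it cannot leak into an answer.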

And of course, privacy and security remain crucial. Which data can safely connect to external APIs like OpenAI or Google? Which information must stay inside your own environment? How do you prevent sensitive company data from ending up in an unintended response?

Key considerations for implementation

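Costs are often manageable. API usage is typically billed by the amount of text processed, and a single call to a model such as those behind ChatGPT or Gemini often costs a few cents or less, which is negligible compared to the hours saved in manual information searches.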
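A back-of-the-envelope estimate makes the cost argument concrete. The sketch below assumes token-based pricing; every number in it (query volume, token counts, prices per 1,000 tokens, working days) is a hypothetical placeholder, so substitute your provider's current price list before drawing conclusions.

```python
def estimate_monthly_cost(queries_per_day, input_tokens, output_tokens,
                          price_in_per_1k, price_out_per_1k, working_days=21):
    """Rough monthly API cost under token-based pricing."""
    cost_per_query = (input_tokens / 1000 * price_in_per_1k
                      + output_tokens / 1000 * price_out_per_1k)
    return cost_per_query * queries_per_day * working_days


# All values below are illustrative assumptions, not real prices.
monthly_cost = estimate_monthly_cost(
    queries_per_day=200,
    input_tokens=2000,      # question plus retrieved context
    output_tokens=500,      # generated answer
    price_in_per_1k=0.01,
    price_out_per_1k=0.03,
)
print(f"Estimated monthly cost: {monthly_cost:.2f}")
```

With these placeholder values, one query costs 0.035 and a month of 200 queries per working day comes to 147.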

Evaluation, however, is more complex. How do you measure whether a model’s output is “good”? Traditional AI models can be scored by accuracy; generative AI output rarely has a single correct answer, so it usually calls for human judgment. Some organizations use another model to cross-check results, while others rely on expert reviews or sampling methods.

Scalability introduces new challenges. When dealing with a few dozen queries a day, manual review is feasible. When scaling to thousands, you need automated quality checks and a clear understanding of which errors are acceptable, and which are not.
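Sampling is one way to keep expert review feasible as volume grows. The following is a minimal sketch, assuming answers are logged as a list and that a reviewer records a simple correct/incorrect verdict per sampled answer. The function names and the 5% sample rate are illustrative choices, not recommendations.

```python
import random


def sample_for_review(answers, sample_rate, seed=0):
    """Pick a reproducible random subset of logged answers for expert review."""
    if not answers:
        return []
    sample_size = max(1, round(len(answers) * sample_rate))
    return random.Random(seed).sample(answers, sample_size)


def estimated_error_rate(review_verdicts):
    """Share of reviewed answers that the expert marked as wrong (False)."""
    if not review_verdicts:
        raise ValueError("no reviews to score")
    wrong = sum(1 for is_correct in review_verdicts if not is_correct)
    return wrong / len(review_verdicts)


# Hypothetical log of 1,000 answers; review 5% of them.
logged_answers = [f"answer-{i}" for i in range(1000)]
to_review = sample_for_review(logged_answers, sample_rate=0.05)
print(len(to_review), "answers selected for review")
```

The error rate measured on the sample is only an estimate for the full set of answers; a larger sample narrows the uncertainty at the cost of more review time.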

For high-risk use cases, extra caution is essential. A small error in a maintenance guide is inconvenient; in safety procedures, it can be critical. Classify your use cases by risk and design your controls accordingly.
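Risk classification can be as simple as a lookup table that ties each use case to a set of controls. The tiers, use-case names, and controls in this sketch are hypothetical examples; the one deliberate design choice is that anything not yet classified falls into the strictest tier.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical controls per risk tier.
CONTROLS = {
    Risk.LOW: {"human_review": "sampled", "show_sources": True},
    Risk.HIGH: {"human_review": "every answer", "show_sources": True},
}

# Hypothetical classification of use cases.
USE_CASE_RISK = {
    "maintenance guide lookup": Risk.LOW,
    "safety procedure lookup": Risk.HIGH,
}


def controls_for(use_case):
    # Unknown use cases default to the strictest tier until they are classified.
    risk = USE_CASE_RISK.get(use_case, Risk.HIGH)
    return CONTROLS[risk]


print(controls_for("safety procedure lookup"))
```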

Where to start

Start with a specific use case, not the technology itself. Many companies begin with the idea that they “should do something with AI”, only to discover that their problem doesn’t actually need a language model.

If your process extracts data from fixed-format documents, pattern matching might be faster and cheaper. LLMs are most effective with unstructured data: situations where you’re searching for insights you can’t easily define in advance.
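To illustrate the pattern-matching alternative: when every document follows the same layout, a regular expression can pull out the fields without any language model. The report line format and field names below are invented for this example.

```python
import re

# Hypothetical fixed format: "Report <id>; Machine: <name>; Result: PASS|FAIL"
REPORT_PATTERN = re.compile(
    r"Report (?P<report_id>\d+); Machine: (?P<machine>[\w-]+); Result: (?P<result>PASS|FAIL)"
)


def parse_report_line(line):
    """Return the extracted fields, or None if the line does not fit the format."""
    match = REPORT_PATTERN.fullmatch(line.strip())
    return match.groupdict() if match else None


parsed = parse_report_line("Report 1042; Machine: PRESS-07; Result: FAIL")
print(parsed)

# Free text does not fit the pattern; this is where an LLM becomes useful.
unparsed = parse_report_line("Operator noticed a rattling sound near the press.")
print(unparsed)
```

The second call returns `None`, which marks the boundary between what fixed patterns can handle and what needs a model that copes with free-form language.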

Begin small and iterate. Work with a limited dataset and a focused question. Test with domain experts, refine your prompts, and expand gradually. The strength of LLMs lies in this iterative approach: rapid experimentation without retraining from scratch.

Generative AI opens up enormous potential, but successful implementation requires more than access to a powerful model. It starts with well-structured data, clear processes, and realistic expectations. Organizations that focus on these fundamentals will be the ones to fully unlock the value of this transformative technology.

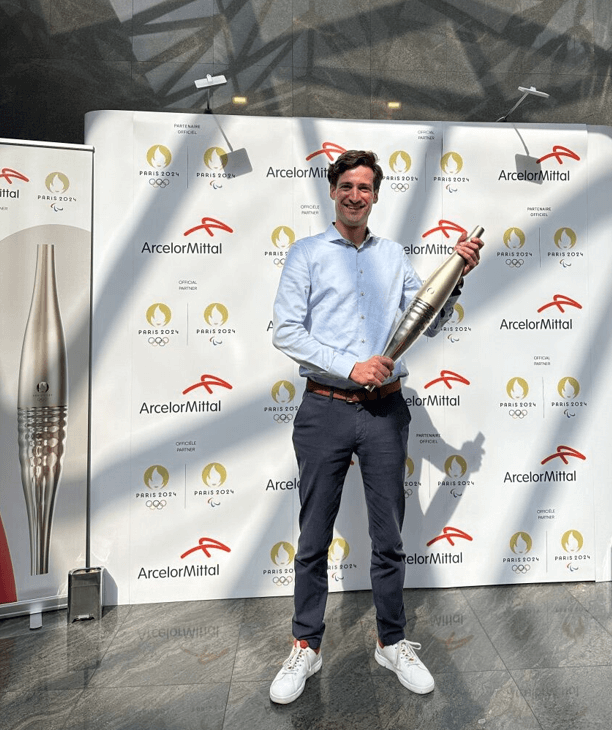

Reach out for opportunities, collaborations, or questions. We're here to connect.

![tmc_jurre_v7-[3]-[0-05-57-21].jpg tmc_jurre_v7-[3]-[0-05-57-21].jpg](https://www.themembercompany.com/cache/271965b9655f503d67ca404938438f12/tmc_jurre_v7-[3]-[0-05-57-21].jpg)